February 15th, 2026

13 Best Data Quality Management Tools for Small Companies

By Drew Hahn · 28 min read

13 Best data quality management tools for small companies: At a glance

Data quality problems show up in two ways for small companies: through automated monitoring that flags issues early, or during analysis when answers reveal something’s wrong. These 13 platforms range from analysis-first tools to heavier validation and governance systems you might grow into.

Here's how they compare:

| Tool | Best for | Starting price | Key strength |
|---|---|---|---|
| Dedupely | Removing duplicate records from small datasets | $40/month for up to 30,000 CRM records | No-code deduplication for CSV and list-based data |
| dbt | SQL-based transformations | $100/user/month, with a free Developer tier | Define and version data quality tests alongside SQL models |
| Soda | Automated data quality checks on existing pipelines | | Write data quality checks using simple, readable rules |
| Great Expectations | Python-based validation | Custom pricing, with a free Developer tier | Code-based testing with detailed setup guides |
| Melissa Data Quality Suite | Address, contact, and identity data validation | Pay-as-you-go ($40/10,000 credits) | High-accuracy validation APIs with pay-as-you-go pricing |
| Julius | Lightweight data quality awareness during analysis | | Highlights missing, inconsistent, or misjoined data while answering questions |
| Atlan | Collaborative data quality | | Teams can flag and discuss data issues together |
| Microsoft Purview | Microsoft ecosystem integration | | Discovers, classifies, and tracks data across Azure services |
| Dataedo | Lightweight documentation | $18,000/year for 3 editors | Shows where data comes from without a complex setup |
| Monte Carlo | Automated anomaly detection | | Alerts you when metrics change unexpectedly |
| Ataccama One | Automated data profiling | | Scans your data and suggests quality rules automatically |
| Collibra | Governance and quality combined | | Manage data policies and quality rules in one system |
| Informatica | Enterprise-scale data quality | | Runs complex data quality rules at enterprise scale |

1. Dedupely: Best for removing duplicate records from small datasets

What it does: Dedupely is a no-code deduplication tool that finds and merges duplicate records in your CRM, spreadsheets, or contact lists. It uses fuzzy matching to catch duplicates even when names, emails, or addresses don't match exactly.

Who it's for: Small businesses managing customer data in spreadsheets or basic CRMs who need to clean up duplicates without technical skills.

I uploaded a CSV with 5,000 customer records into Dedupely, including obvious duplicates and harder cases where people used different email addresses or slight name variations. The tool flagged potential matches and let me review them before merging.

The matching logic caught cases I would’ve missed manually. “John Smith” and “J. Smith” at the same company were flagged as potential duplicates, along with contacts that used personal and work emails in separate rows. I could adjust the matching sensitivity to be stricter or more lenient, depending on how confident I wanted to be.
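
To make the idea of fuzzy matching with adjustable sensitivity concrete, here is a minimal Python sketch. It is not Dedupely's algorithm, which isn't documented here. It assumes a simple string-similarity score from the standard library's `difflib`, and the record fields, the `find_candidates` helper, and the threshold values are all hypothetical.

```python
from difflib import SequenceMatcher
from itertools import combinations


def normalize(value: str) -> str:
    """Lowercase and drop punctuation so 'J. Smith' compares as 'j smith'."""
    cleaned = "".join(ch for ch in value.lower() if ch.isalnum() or ch.isspace())
    return " ".join(cleaned.split())


def similarity(a: str, b: str) -> float:
    """Similarity between 0.0 and 1.0 after normalization."""
    return SequenceMatcher(None, normalize(a), normalize(b)).ratio()


def find_candidates(records, threshold=0.6):
    """Return (id, id, score) for same-company records whose names look alike."""
    pairs = []
    for left, right in combinations(records, 2):
        # Only compare contacts at the same (normalized) company.
        if normalize(left["company"]) != normalize(right["company"]):
            continue
        score = similarity(left["name"], right["name"])
        if score >= threshold:
            pairs.append((left["id"], right["id"], round(score, 2)))
    return pairs


# Hypothetical sample records, not real customer data.
records = [
    {"id": 1, "name": "John Smith", "company": "Acme Inc"},
    {"id": 2, "name": "J. Smith", "company": "Acme Inc."},
    {"id": 3, "name": "Maria Lopez", "company": "Acme Inc"},
]

lenient = find_candidates(records, threshold=0.6)  # flags records 1 and 2
strict = find_candidates(records, threshold=0.9)   # flags nothing
```

Raising the threshold trades missed duplicates for fewer false matches, which is why reviewing flagged pairs before merging still matters.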

Key features

Fuzzy matching: Catches duplicates even when data doesn't match exactly

CSV and CRM support: Works with uploaded files and select CRM platforms

Merge preview: Review matches before combining records

Pros

No technical skills needed to find and merge duplicates

Adjustable matching sensitivity for different use cases

Handles common variations in names, emails, and addresses

Cons

Record limits make it unsuitable for large databases

Focused only on deduplication rather than broader data quality issues

Pricing

Dedupely starts at $40 per month for up to 30,000 CRM records.

Bottom line

2. dbt: Best for SQL-based transformations

What it does: dbt (data build tool) is a transformation framework that lets you write SQL queries to clean and structure data in your warehouse. It includes built-in testing capabilities so you can define data quality checks that run automatically whenever your transformations execute.

Who it's for: Data analysts and engineers who work in SQL and want to version-control their data transformations alongside quality tests.

I tested dbt by building a transformation pipeline that cleaned sample sales data and ran validation tests on the results. The setup requires connecting to a data warehouse and writing SQL models that transform raw data into clean tables. Tests run automatically as part of the transformation process, catching issues before bad data reaches reports.
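
The testing idea can be illustrated without a warehouse. dbt's data tests are SQL queries that pass when they return no failing rows, and its built-in generic tests include `unique` and `not_null`. The sketch below mimics that pattern in Python with an in-memory SQLite database. It is not dbt's API or project layout, and the table names, columns, and `run_tests` helper are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE raw_sales (order_id INTEGER, customer_id INTEGER, amount REAL);
    INSERT INTO raw_sales VALUES
        (1, 10, 25.0), (2, 11, 40.0), (2, 11, 40.0), (3, NULL, 15.0);

    -- The "model": a cleaned table built from raw data with plain SQL.
    CREATE TABLE clean_sales AS
        SELECT DISTINCT order_id, customer_id, amount
        FROM raw_sales
        WHERE customer_id IS NOT NULL;
""")

# Each test is a query that selects failing rows; zero rows means it passes.
TESTS = {
    "order_id is unique":
        "SELECT order_id FROM {table} GROUP BY order_id HAVING COUNT(*) > 1",
    "customer_id is not null":
        "SELECT order_id FROM {table} WHERE customer_id IS NULL",
}


def run_tests(table):
    """Return the number of failing rows per test for the given table."""
    results = {}
    for name, query in TESTS.items():
        failing = conn.execute(query.format(table=table)).fetchall()
        results[name] = len(failing)
    return results


raw_results = run_tests("raw_sales")      # both tests find one failing row
clean_results = run_tests("clean_sales")  # both tests pass with zero rows
```

Running the same tests against the raw and cleaned tables shows the point of pairing tests with transformations: the cleaned model passes, so bad rows never reach a report.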

The version control integration stood out during my testing. Every change to a SQL model or test gets tracked in Git, so you can see who modified what and roll back if something breaks. This matters when multiple people work on the same data pipelines.

The free Developer tier includes core transformation and testing features. Teams that need deployment scheduling or role-based permissions will need paid tiers.

Key features

SQL-based transformations: Write data cleaning logic in standard SQL rather than Python or proprietary languages

Automated testing: Define quality checks that run with every transformation

Version control: Track all changes to models and tests through Git integration

Pros

Works with SQL skills most data teams already have

Tests run automatically as part of transformation workflows

Git integration provides audit trail for all changes

Cons

Requires data warehouse infrastructure to run

Per-user pricing can get expensive for growing teams

Pricing

dbt starts at $100 per user per month, with a free Developer tier.

Bottom line

3. Soda: Best for automated data quality checks on existing pipelines

What it does: Soda is a data quality platform that runs automated checks on data pipelines and alerts teams when quality issues appear. You write checks using simple, readable syntax that defines what your data should look like, and Soda runs those checks automatically on a schedule.

Who it's for: Data teams that want automated quality monitoring without building custom validation code from scratch.

Soda impressed me with how quickly I could set up checks compared to code-heavy alternatives. The platform uses a YAML-based syntax called SodaCL that reads almost like natural language. I wrote checks like "customer_id should never be null" or "revenue values must be positive" without needing to understand complex Python libraries or SQL testing frameworks.
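
The two rules quoted above can be sketched as declarative checks that feed alerts. This is plain Python for illustration, not SodaCL syntax or Soda's API. The `Check` class, `scan` function, and alert format are hypothetical names introduced here.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Check:
    description: str                   # human-readable rule
    column: str                        # column the rule applies to
    is_valid: Callable[[object], bool]  # returns True when a value passes


CHECKS = [
    Check("customer_id should never be null", "customer_id",
          lambda v: v is not None),
    Check("revenue values must be positive", "revenue",
          lambda v: isinstance(v, (int, float)) and v > 0),
]


def scan(rows, checks=CHECKS):
    """Run every check against every row and report failures per check."""
    report = []
    for check in checks:
        failed_rows = [
            i for i, row in enumerate(rows)
            if not check.is_valid(row.get(check.column))
        ]
        report.append({
            "check": check.description,
            "passed": not failed_rows,
            "failed_rows": failed_rows,
        })
    return report


def build_alerts(report):
    """Turn failed checks into messages a scheduler could post to chat or email."""
    return [
        f"ALERT: '{r['check']}' failed on rows {r['failed_rows']}"
        for r in report if not r["passed"]
    ]


# Hypothetical sample rows with one null id and one negative revenue.
rows = [
    {"customer_id": "C-1", "revenue": 120.0},
    {"customer_id": None, "revenue": 80.0},
    {"customer_id": "C-3", "revenue": -5.0},
]
report = scan(rows)
alerts = build_alerts(report)
```

Keeping the rules as data rather than scattered `if` statements is what makes this style quick to extend: adding a check means adding one entry to the list.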

The monitoring dashboard shows which checks passed or failed across all your data sources. When a check fails, Soda can send alerts to Slack, email, or incident management tools so teams know about problems immediately, rather than discovering them days later when reports look wrong.

Key features

Readable check syntax: Write data quality rules without complex code

Automated monitoring: Checks run on schedule and alert teams when failures occur

Multi-source support: Monitor quality across different databases and warehouses

Pros

Faster setup than building custom validation code

Alerts integrate with existing team communication tools

Supports major data warehouses and databases

Cons

Higher starting price than open-source alternatives

Requires existing data pipeline infrastructure

Pricing

Bottom line

4. Great Expectations: Best for Python-based validation

What it does: Great Expectations is an open-source data validation framework that lets you define expectations for your data and test them automatically. It runs checks on datasets to catch issues like missing values, incorrect data types, or values outside expected ranges.

Who it's for: Teams with Python developers who want to build automated data quality tests into their pipelines.

Great Expectations performed well in my testing once I got past the initial setup. The framework uses Python code to define what your data should look like, then validates incoming data against those rules. You write expectations like "column values must be between 0 and 100" or "this column should never be null," and the tool checks every batch of data that comes through.
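
As a rough illustration of validating a batch against expectations, the sketch below checks the two rules mentioned above and renders a short pass/fail summary. It is written in plain Python and does not use the Great Expectations library or its API. The function names, result fields, and sample batch are hypothetical.

```python
def _result(rule, batch, unexpected):
    """Package a validation outcome with counts a report could display."""
    return {
        "rule": rule,
        "success": not unexpected,
        "unexpected_count": len(unexpected),
        "unexpected_percent": round(100 * len(unexpected) / len(batch), 1) if batch else 0.0,
    }


def check_not_null(batch, column):
    """Expect every row to have a non-null value in the column."""
    unexpected = [row for row in batch if row.get(column) is None]
    return _result(f"{column} is never null", batch, unexpected)


def check_between(batch, column, low, high):
    """Expect non-null values to fall within [low, high]."""
    unexpected = [
        row for row in batch
        if row.get(column) is not None and not (low <= row[column] <= high)
    ]
    return _result(f"{column} is between {low} and {high}", batch, unexpected)


def render_summary(results):
    """Produce a plain-text summary that non-coders can read."""
    lines = []
    for r in results:
        status = "PASS" if r["success"] else "FAIL"
        lines.append(
            f"[{status}] {r['rule']} "
            f"({r['unexpected_count']} unexpected, {r['unexpected_percent']}%)"
        )
    return "\n".join(lines)


# Hypothetical batch: one out-of-range value and one null.
batch = [{"score": 88}, {"score": 101}, {"score": None}, {"score": 42}]
results = [check_not_null(batch, "score"), check_between(batch, "score", 0, 100)]
summary = render_summary(results)
```

Running the same functions on each incoming batch is the core loop; the summary stands in for the generated documentation described next.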

The validation results appear in automatically generated documentation that shows which tests passed and which failed. I appreciated how this creates a shared reference point for teams. Data engineers can see exactly what broke, and business users can check data quality status without reading code.

The free Developer tier worked fine for my testing on smaller datasets. Larger teams that need features like data profiling, automated expectation generation, or centralized validation management will likely need to upgrade to a paid plan.

Key features

Expectation library: Pre-built validation rules for common data quality checks

Data docs: Auto-generated documentation showing test results and data profiles

Pipeline integration: Runs validation checks inside Python-based data pipelines

Pros

Open-source with detailed community documentation

Works with common data tools like pandas, Spark, and SQL databases

Creates documentation automatically from test results

Cons

Requires Python knowledge to write and maintain expectations

Initial setup takes time compared to no-code tools

Pricing

Great Expectations uses custom pricing, with a free Developer tier.

Bottom line

5. Melissa Data Quality Suite: Best for address, contact, and identity data validation

What it does: Melissa Data Quality Suite validates and standardizes customer contact information through APIs. It verifies addresses against postal databases, checks phone number formats, validates email addresses, and confirms identity data to reduce errors in customer records.

Who it's for: Businesses that collect customer data and need to verify addresses, phone numbers, and emails before storing them.

I tested Melissa Data Quality Suite by running sample customer records through its address verification API.

The platform caught formatting issues I likely would have missed, such as apartments written as “Apt” instead of “#” or street abbreviations that didn’t match USPS standards. It also returned standardized addresses that matched official postal formats. This is important for businesses that ship products or send mail.

The phone validation API checked whether numbers were active and identified whether they were mobile or landline. It also flagged numbers listed on do-not-call registries. Email verification went beyond simple syntax checks and confirmed whether addresses could receive messages without bouncing, helping to reduce wasted marketing sends to invalid contacts.

Key features

Address standardization: Corrects formatting and validates against postal databases

Contact verification: Checks phone numbers and email addresses are valid and active

Identity validation: Confirms personal information matches official records

Pros

High accuracy rates for address and contact validation

Pay-as-you-go pricing fits unpredictable usage patterns

API integration works with existing data collection forms

Cons

Focused specifically on contact data rather than broader quality issues

Costs increase with validation volume

Pricing

Melissa Data Quality Suite uses pay-as-you-go pricing at $40 per 10,000 credits.

Bottom line

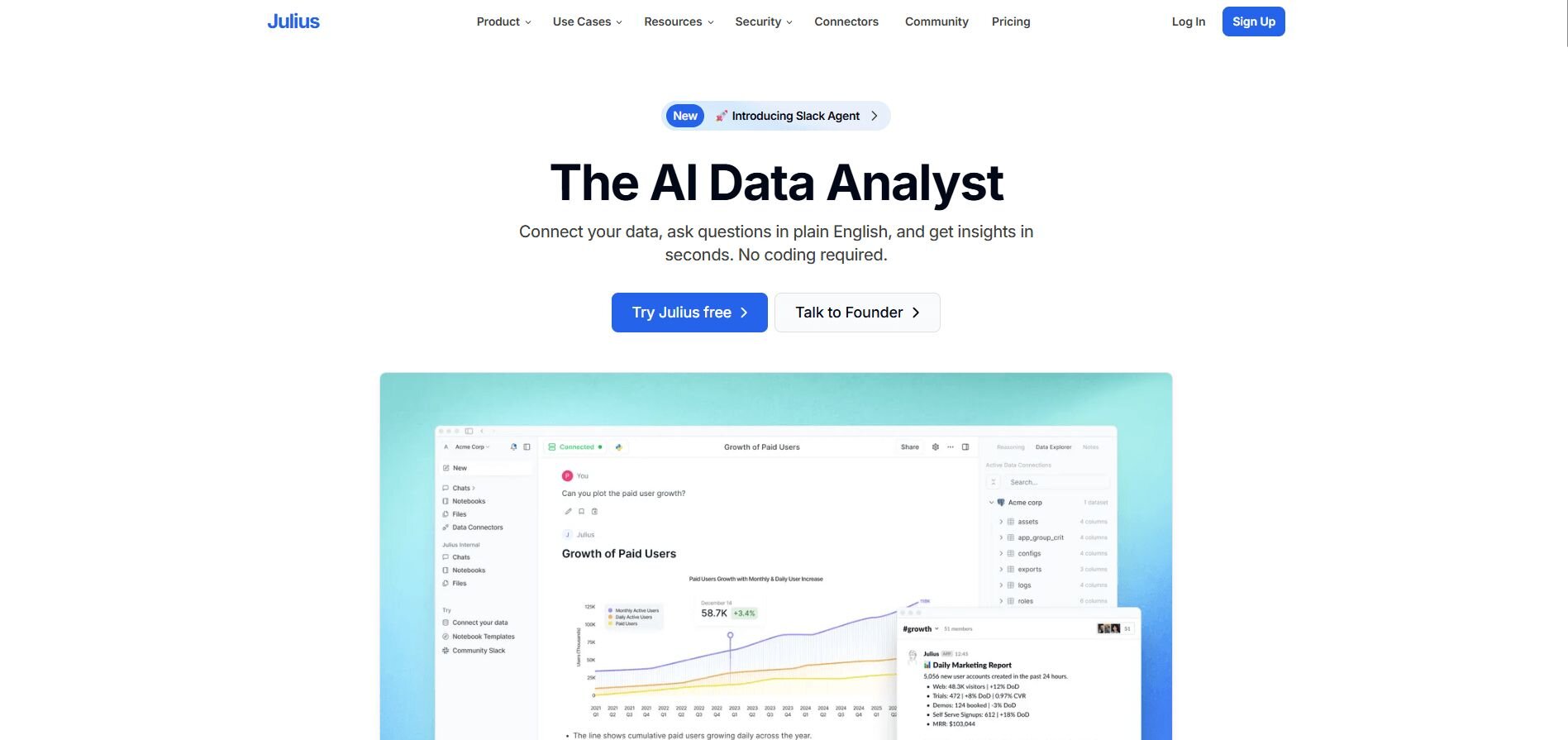

6. Julius: Best for lightweight data quality awareness during analysis

What it does: Julius is an AI-powered data analysis platform. Instead of running automated validation rules, it helps you spot data quality issues during regular analysis. It connects to your databases, generates queries from natural language, and learns your data structure over time. It’s simpler than a full monitoring system, but still relies on human review.

Who it's for: Small teams that need trustworthy answers but don’t have the time or resources to maintain a full data quality monitoring stack.

We built Julius around the idea that many teams don’t need complex validation pipelines. They need to catch issues while answering business questions. Rather than defining rules upfront, Julius makes unusual results easier to notice during analysis.

The platform builds an understanding of table relationships and column meanings through its semantic layer. Repeated questions about revenue, customers, or operational metrics stay consistent, which makes unexpected changes easier to identify.

Notebooks let you schedule saved analyses and send consistent reports to Slack or email. The same calculations run each time, which reduces ongoing setup and maintenance. This is simpler than managing rule-based systems, but it doesn’t replace automated pipeline monitoring.

Tip: If analysis reveals duplicates or formatting issues, this guide explains how to clean data in Julius before re-running your queries.

Key features

Natural language queries: Ask questions and get charts without writing SQL

Connected data sources: Links to Postgres, BigQuery, Snowflake, Google Sheets, and more

Reusable Notebooks: Save analyses that refresh automatically with updated data

Scheduled reporting: Send results to Slack or email on a set schedule

Semantic learning: Remembers database relationships and column meanings with each query

Pros

Helps you spot data issues during analysis without building validation rules

Supports both quick chat analysis and saved Notebook workflows

Database understanding improves with use

Cons

Built for business analysis rather than advanced statistical modeling

Works best with clean, consistently formatted data

Pricing

Bottom line

Special mentions

These tools address more specialized data quality needs or become relevant as teams scale. Here are 7 more tools worth considering:

Atlan: Atlan combines data cataloging with quality tracking in a collaborative workspace. Teams can document datasets, flag quality issues, and discuss problems through Slack integration. It works well for growing teams that need multiple people coordinating on data quality work.

Microsoft Purview: Microsoft Purview, formerly known as Azure Purview, discovers, classifies, and tracks data across Azure services automatically. It maps data lineage and applies quality rules within Microsoft’s ecosystem. Companies already using Azure databases and warehouses get native integration without connecting third-party tools.

Dataedo: Dataedo documents database structures and maps data lineage with minimal setup requirements. It shows where data originates and how it flows through systems without building complex tracking infrastructure. The documentation interface helps teams understand data relationships.

Monte Carlo: Monte Carlo monitors data pipelines for anomalies and alerts teams when metrics change unexpectedly. It uses machine learning to establish baseline patterns and flag deviations automatically. This approach flags unexpected changes without requiring explicit rules for every metric.

Ataccama One: Ataccama One profiles data automatically and suggests quality rules based on patterns it detects. AI-driven recommendations reduce manual rule creation for large datasets. The platform handles enterprise-scale validation across complex systems.

Collibra: Collibra combines data governance with quality management in one platform. It handles data policies, access controls, and validation rules together. Organizations that need formal governance alongside quality checks can manage both requirements without separate tools.

Informatica: Informatica runs complex data quality rules at enterprise scale across multiple systems. It handles large-scale transformations, validation, and cleansing for organizations with extensive data infrastructure. The platform targets enterprises with mature data operations.
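
The baseline-and-deviation idea behind anomaly detection, mentioned under Monte Carlo above, can be sketched with a simple statistical stand-in. This is not Monte Carlo's method, which the description above attributes to machine learning. It assumes a trailing-window z-score, and the `flag_anomalies` function, window size, and threshold are hypothetical.

```python
from statistics import mean, stdev


def flag_anomalies(values, window=7, z_threshold=3.0):
    """Flag indexes whose value deviates strongly from the trailing baseline."""
    flagged = []
    for i in range(window, len(values)):
        baseline = values[i - window:i]
        mu, sigma = mean(baseline), stdev(baseline)
        if sigma == 0:
            # A perfectly flat baseline: any change at all is unexpected.
            if values[i] != mu:
                flagged.append(i)
            continue
        z = (values[i] - mu) / sigma
        if abs(z) >= z_threshold:
            flagged.append(i)
    return flagged


# Hypothetical daily row counts with one sudden drop at index 8.
daily_rows = [1000, 1010, 990, 1005, 995, 1002, 998, 1001, 400, 1003]
anomalies = flag_anomalies(daily_rows)  # flags index 8 only
```

No rule such as "row count must exceed 900" was written by hand; the threshold comes from recent history. One limitation of this simple version is that a flagged value still enters later baselines and widens them, which more careful detectors account for.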

How I tested data quality tools for small companies

I connected each platform to test databases containing mock customer records, sales transactions, and product data with intentional quality problems built in. The goal was to see which tools caught real issues business teams face, not just theoretical edge cases.

Here's what I evaluated:

Problem detection: I seeded datasets with duplicate customers, null values in required fields, and formatting inconsistencies. The strongest tools flagged these immediately, but others required multiple configuration attempts before catching obvious errors.

Usability for non-technical users: I tested whether someone without SQL knowledge could set up checks and interpret results. Platforms that required extended documentation reading before running a single validation didn’t meet small business needs.

Integration effort: Connecting to Postgres, Snowflake, or CSV files should take minutes, not hours. I tracked the actual setup time from account creation to the first successful quality check.

Result clarity: When checks failed, I needed to know which records had problems and why. Vague error messages that said "validation failed" without specifics created more work than they saved.

Maintenance requirements: I ran the same checks weekly to see which platforms stayed stable and which required constant adjustments. Small teams can't spend every Monday fixing broken validation rules.

Cost predictability: I calculated what each tool would cost at different data volumes and team sizes. Platforms with surprise charges for basic features or unclear pricing structures got marked down.
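
The cost-predictability step above comes down to simple arithmetic. Here is a minimal sketch using only the list prices quoted in this article. Several things are assumptions rather than vendor facts: that one validation consumes one credit, that credits are bought in whole packs of 10,000, and that free tiers and discounts are ignored. The function names are hypothetical.

```python
import math


def dbt_monthly_cost(users, price_per_user=100):
    """Per-user pricing: cost grows linearly with team size."""
    return users * price_per_user


def melissa_cost(validations, credits_per_validation=1,
                 pack_price=40, pack_size=10_000):
    """Pay-as-you-go pricing. ASSUMPTION: 1 credit per validation, whole packs only."""
    credits = validations * credits_per_validation
    packs = math.ceil(credits / pack_size)
    return packs * pack_price


def dedupely_monthly_cost(records, base_price=40, included_records=30_000):
    """Flat price up to the record limit; pricing beyond it is not stated here."""
    return base_price if records <= included_records else None


# Hypothetical growth scenarios for a small team.
scenarios = [
    {"users": 2, "validations": 5_000, "records": 10_000},
    {"users": 5, "validations": 25_000, "records": 30_000},
    {"users": 10, "validations": 120_000, "records": 50_000},
]

estimates = [
    {
        "dbt": dbt_monthly_cost(s["users"]),
        "melissa": melissa_cost(s["validations"]),
        "dedupely": dedupely_monthly_cost(s["records"]),
    }
    for s in scenarios
]
```

A `None` result marks a scenario that falls outside the published starting tier, which is exactly where a small team should ask the vendor for a quote before committing.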

Which data quality management tool should you choose?

The right data quality management tool depends on your team's technical skills, the data problems you face most often, and whether you need automated monitoring or analysis-based checks.

Choose:

Dedupely if duplicate customer records are the main issue and you need no-code cleanup for contact lists.

dbt if you already transform data using SQL and want quality tests version-controlled with transformation logic.

Soda if you need automated monitoring that alerts your team when checks fail across multiple data sources.

Great Expectations if your team works in Python and wants automated validation tests that run inside data pipelines.

Melissa Data Quality Suite if you collect customer addresses, phone numbers, or emails and need to verify accuracy before storing them.

Julius if you want to catch data quality issues while answering business questions and need your database context to improve with use.

Atlan if multiple team members need to coordinate on data quality and want discussions tied to specific datasets.

Microsoft Purview if your data lives in Azure services and you want native discovery and quality tracking.

Dataedo if you need clear documentation of where data comes from and how it flows through your systems.

Monte Carlo if you want anomaly detection that flags unexpected metric changes without defining explicit rules.

Ataccama One if you’re growing into enterprise-scale operations and want AI-assisted quality rule suggestions.

Collibra if you need to manage governance policies and quality validation in a single platform.

Informatica if you run complex, multi-system data operations at enterprise scale and need comprehensive transformation and cleansing.

My final verdict

I found that Great Expectations and dbt work well for teams with Python or SQL skills who want code-based validation, while Soda and Monte Carlo focus on automated monitoring with alerts. Dedupely handles the problem of duplicate records, and Melissa validates contact information. Atlan and Collibra target teams coordinating quality work across multiple people or managing formal governance.

Julius approaches data quality differently by surfacing problems during analysis rather than through pre-built validation rules. You catch issues when query results look wrong, and the platform learns your database structure, so answers to repeated questions stay consistent and are easier to trust. I’ve found that this matters for small teams that need trustworthy numbers without building testing infrastructure.

Want to catch data quality issues without setting up a monitoring system? Try Julius

Most data quality management tools for small companies focus on automated validation and alerting. Julius takes a lighter approach by helping teams spot issues during everyday analysis. You can ask questions in plain English, connect to your database, and notice missing values or inconsistent metrics as you work.

Here’s how Julius helps:

Smarter over time with the Learning Sub Agent: Julius's Learning Sub Agent learns your database structure, table relationships, and column meanings as you use it. With each query on connected data, it gets better at finding the right information and delivering faster, more accurate answers without manual configuration.

Direct connections: Link databases like Postgres, Snowflake, and BigQuery, or integrate with Google Ads and other business tools. You can also upload CSV or Excel files. Your analysis reflects live data, so you’re less likely to rely on outdated spreadsheets.

Catch outliers early: Julius highlights suspicious values and metrics that throw off your results, so you can make confident business decisions based on clean and trustworthy data.

Quick single-metric checks: Ask for an average, spread, or distribution, and Julius shows you the numbers with an easy-to-read chart.

Built-in visualization: Get histograms, box plots, and bar charts on the spot instead of jumping into another tool to build them.

Recurring summaries: Schedule analyses like weekly revenue or delivery time at the 95th percentile and receive them automatically by email or Slack.

One-click sharing: Turn a thread of analysis into a PDF report you can pass along without extra formatting.

Ready to spot data quality problems while answering business questions? Try Julius for free today.